The Big Divide: Business Analytics

One challenge that keeps coming back to me is the valuation of modeling work. This critical step is seldom done properly, which casts doubt on the value of the work for lack of dollar visibility. I will try to tackle it in this article.

A few weeks ago, I overheard this discussion about a propensity model [think of a bank that wants to know whether a customer will accept an offer or not]:

- Business Leader: Ok, tell me how good your model is ...

- Modeler: Pretty good, my ROC performance is .82

- Business Leader: What does that mean?

- Modeler: This is the area under the ROC curve, it means that the model is good at discriminating the prospects that will answer from the prospects that will NOT answer

- Business Leader (impatient): I get that but can you translate into dollars?

- Modeler: That depends on many parameters such as threshold, size of the scoring set ...

This is quite revealing of the current Big Divide in the Analytics industry: very few people speak both languages or even want to bridge the gap. The Business Leader was expecting a dollar valuation whereas the Modeler talked about receiver operating characteristic (ROC) performance. It gets even worse when there is little direct relationship between model performance and dollars (think about a Recommendation Engine; see note 1).

That being said, this is not set in stone and there are good ways to communicate the performance effectively by finding an appropriate middle ground.

Let's take a dummy example: a credit card corporation buys a list of US addresses and wants to know whom to send offers to. After testing responses, the Analytics department creates a model to predict the response of the remaining prospects. After several iterations, the model is deemed acceptable and ready to be rolled out.

In this situation, it's preferable not to communicate the ROC performance. Instead, once the model is selected, the actual dollar impact can be determined at various levels of detection. This spreadsheet lays out the revenue/cost drivers for 3 types of customers (I'm happy to share the Excel, PM me).

Further, the spreadsheet presents the 3 possible scenarios:

- In the selection group:
  - The customers that you were right to target (labelled "True Positive")
    - Revenue: The future value of the customer once acquired
    - Cost: Cost of the marketing campaign
  - The customers that you were NOT right to target (labelled "False Positive")
    - Revenue: 0 by definition
    - Cost: Cost of the marketing campaign
- Not selected:
  - The customers you did not contact but who would have been interested (labelled "False Negative")
    - Lost opportunity (if any?)
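For readers who prefer code to spreadsheets, the three scenarios above boil down to a few lines of arithmetic. Here is a minimal Python sketch. The names `Assumptions` and `campaign_value` and every dollar figure in it are made up for illustration; they are not taken from the spreadsheet.

```python
from dataclasses import dataclass


@dataclass
class Assumptions:
    """Hypothetical business inputs; replace them with your own figures."""
    customer_value: float      # future value of one acquired customer
    contact_cost: float        # cost of contacting one prospect
    missed_value: float = 0.0  # lost opportunity per false negative, if you count it


def campaign_value(tp: int, fp: int, fn: int, a: Assumptions) -> dict:
    """Translate confusion-matrix counts into dollars.

    tp: contacted prospects who accept the offer (true positives)
    fp: contacted prospects who do not accept (false positives)
    fn: prospects not contacted who would have accepted (false negatives)
    """
    revenue = tp * a.customer_value   # only true positives bring revenue
    cost = (tp + fp) * a.contact_cost  # everyone contacted costs money
    lost = fn * a.missed_value         # reported separately, not netted out
    return {
        "revenue": revenue,
        "cost": cost,
        "net": revenue - cost,
        "lost_opportunity": lost,
    }


# Illustrative numbers only
example = campaign_value(
    tp=500, fp=9_500, fn=300,
    a=Assumptions(customer_value=200.0, contact_cost=1.0),
)
print(example)
```

Keeping the lost opportunity separate from the net figure is a design choice: it lets the Business Leader decide whether a missed prospect is a real cost or only a missed upside.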

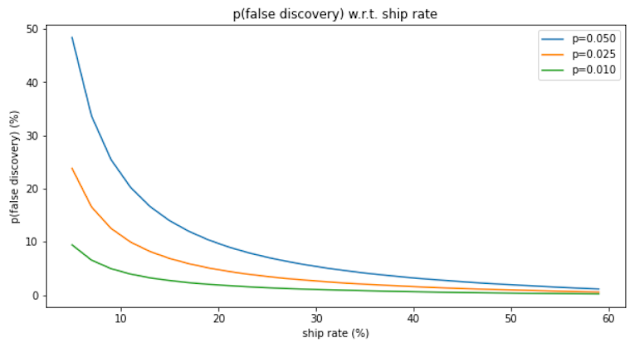

As a technical digression, it's really another representation of a ROC curve: the detection thresholds are the rows and the cells of the confusion matrix are the columns (we ignore the true negatives since they do not affect the numbers).

Note that the spreadsheet relies on a number of assumptions: the cost of sending a mailing, the value of an acquired customer, and other components. This information can nevertheless be determined without too much effort.

The conclusion is also quite manifest: 20% of the prospect list should be contacted for a top-line impact of roughly $1.6MM.
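The threshold-by-threshold table can also be sketched in Python. Everything in this sketch is an assumption: the scores and responses are randomly generated, the $50 customer value and $1 mailing cost are placeholders, and the function name `value_by_depth` is mine. Its output will therefore not match the figures above; it only shows the mechanics of ranking prospects by score and valuing each contact depth.

```python
import random


def value_by_depth(scores, responded, customer_value, contact_cost, steps=10):
    """Return one row per contact depth (top 10%, 20%, ... of the list).

    scores:    model score per prospect (higher = more likely to respond)
    responded: 1 if the prospect responded in the test, else 0
    """
    # Rank prospects from the highest score to the lowest
    ranked = sorted(zip(scores, responded), key=lambda p: p[0], reverse=True)
    total_responders = sum(responded)
    rows = []
    for step in range(1, steps + 1):
        n_contacted = round(len(ranked) * step / steps)
        tp = sum(r for _, r in ranked[:n_contacted])  # contacted and responded
        fp = n_contacted - tp                         # contacted, no response
        fn = total_responders - tp                    # responders left out
        net = tp * customer_value - n_contacted * contact_cost
        rows.append({"depth": step / steps, "tp": tp, "fp": fp, "fn": fn, "net": net})
    return rows


# Synthetic test sample: the response probability rises with the score
rng = random.Random(0)
scores, responded = [], []
for _ in range(10_000):
    s = rng.random()
    scores.append(s)
    responded.append(1 if rng.random() < 0.1 * s * s else 0)

# Placeholder dollar assumptions
rows = value_by_depth(scores, responded, customer_value=50.0, contact_cost=1.0)
best = max(rows, key=lambda r: r["net"])

for r in rows:
    print(f"top {r['depth']:.0%}: TP={r['tp']} FP={r['fp']} FN={r['fn']} net=${r['net']:,.0f}")
print(f"Best depth on this synthetic data: top {best['depth']:.0%}")
```

The row with the highest net value plays the same role as the 20% line in the spreadsheet: it is the point past which contacting more prospects costs more than it brings in.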

Conclusion:

Any Analytics effort is worth what its label says and you want to be the one putting the number on it. As a result, running the numbers is a good use of your time.

You can use this spreadsheet template to value your own model.

Good luck!

1: Usually, a Recommendation Engine has 2 components: Sales and Ease of Navigation (does the customer find what they want?). Although critical, the latter is hard to translate into a dollar value.
