Should you ship this feature?

Introduction

During my time at tech companies, one of the hardest tasks for my team has been guiding the Product team in its decision to launch a feature. The decision is both highly consequential and fraught with pitfalls. Let's take an example to ease into the topic:

You worked hard to convince leadership to measure the effect of this cool new feature, and you got a nice experiment set up according to the canons of measurement. After 4 weeks, you are seeing a stat sig improvement in your primary metric and no secondary metrics affected negatively. The story is clear as day: you should tell the team to ship it, right?

The problem with shipping features

Once the results are out, the focus is put on the outcomes and leadership is incentivized to ship. As a result, little attention is given to the cost side of the equation:

- Tech debt: Does this feature make the system more complex? Does it hinder long-term growth by exacting a tax on every new feature? Think about the Net Present Value of that tax. This aspect is very often ignored, which saddles some teams with serious disadvantages that culminate in long development cycles when one could reasonably expect cycles 2-3X faster.

- Long-term effect: Experiments measure the short-term effect on a population. They do not capture behavior that takes time to materialize (e.g. competitors' reactions, brand effects, customer word of mouth on "circumventing the feature" in the case of Fraud).

- Is it a false positive: A low p-value does not tell you the probability of a false positive. Instead, it describes the probability of observing a change at least as extreme as the one you are seeing under the null hypothesis. What is the probability of a false positive given what you are seeing?
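To make the Net Present Value framing from the tech debt bullet concrete, here is a minimal sketch. All figures (yearly gain, yearly tax, discount rate, horizon) are hypothetical placeholders chosen for illustration, not numbers from a real launch, and the function name `npv` is an assumption of this sketch.

```python
def npv(cash_flows, discount_rate):
    """Net Present Value of a list of cash flows.

    cash_flows[t] is assumed to arrive at the end of period t + 1.
    """
    return sum(
        cf / (1 + discount_rate) ** (t + 1)
        for t, cf in enumerate(cash_flows)
    )


# Hypothetical inputs, for illustration only.
annual_gain = 100.0   # yearly value measured by the experiment
annual_tax = 30.0     # yearly cost of the extra complexity (tech debt)
discount_rate = 0.10  # assumed discount rate
years = 5             # assumed horizon

gain_npv = npv([annual_gain] * years, discount_rate)
tax_npv = npv([annual_tax] * years, discount_rate)
net_value = gain_npv - tax_npv

print(f"NPV of gain: {gain_npv:.1f}")
print(f"NPV of tax:  {tax_npv:.1f}")
print(f"Net value:   {net_value:.1f}")
```

The point of the exercise is less the exact number than forcing the tax to appear on the same scale as the gain before the launch decision is made.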

Thinking about Tech Debt

Tech Debt is mostly shouldered by Engineering and sometimes by Data Science (models). A good partnership with Eng is necessary to size it well. Here are a few probing questions one can ask:

- Is the system more complex? How so?

- Can you help me understand your development velocity before and after? Can you quantify it?

- Example: we had a single model before, but this feature adds a second one, so training time will increase by 100%, which means a ~20% longer development cycle.

- Is the system more fragile*?

- Do you have more interfaces, more special casing, or a reliance on more data pipelines/APIs that may go stale?

(*) Fragility is an interesting topic that is easy to underestimate. If you add 3 components, each with a 1/1000 chance of failing on any given day (assuming independent failures), the probability that your system will fail at least once over a year is ... about 67% (yes, more likely to happen than not).
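The fragility arithmetic above can be checked in a few lines. This is a sketch that assumes failures are independent across components and across days; the function name is hypothetical.

```python
def prob_at_least_one_failure(n_components, daily_failure_prob, days):
    """Probability that at least one component fails at least once.

    Assumes every component-day is an independent trial.
    """
    # Probability that every component survives every single day.
    p_all_survive = (1 - daily_failure_prob) ** (n_components * days)
    return 1 - p_all_survive


p = prob_at_least_one_failure(n_components=3, daily_failure_prob=1 / 1000, days=365)
print(f"P(at least one failure in a year) = {p:.1%}")
```

With these inputs the result lands at roughly two in three. Correlated failures (for example, two components depending on the same upstream pipeline) would change the number, so treat it as an order-of-magnitude check.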

Rethinking leadership incentive

At the very root of this, it comes down to incentives: a team is seen as successful if it ships features, and so is the leader of an organization. As tech debt is difficult to quantify and takes time to insidiously take its toll on a team's productivity, team leadership often disregards it.

While Data Science cannot change the incentive structure on its own, I believe DS can play a big role in highlighting these trade-offs by leveraging its institutional status as a "guide to decision making". Most leaders are reasonable and endeavor to do right by their team. They understand these trade-offs as long as they are brought up clearly and early. Tactically, folks at my level (DS manager) can force the discussion by requesting an analysis prior to the launch of the experiment (by adding that component to the experiment spec) and getting consensus with PM / Eng on shared launch criteria.

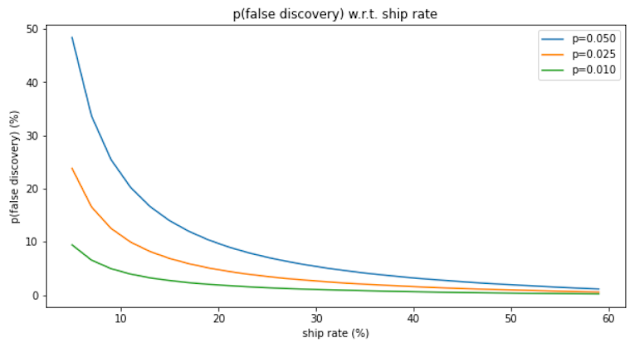

Quantifying False Discovery Rate

To get to False Positives, you will have to dig deeper into the definitions of power and false discovery. You can write 4 equations with 4 unknown variables (TP, FP, TN, FN):

- Power

- False discovery

- Ship rate

- TP + FP + TN + FN = 1

Note: Here, an FP is an experiment that shows a stat sig improvement but was in fact not an actual improvement (false discovery).

Inverting the matrix solves the system. In particular, you are interested in FP / (FP + TP), which gives you P(FP | shipped), that is, the False Discovery Rate.
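As a minimal sketch of that system (not necessarily identical to the linked code), the version below makes two assumptions: TP, FP, TN and FN are shares of all experiments run, and the "False discovery" equation is the test's significance level, alpha = FP / (FP + TN). The function names and the example inputs are hypothetical.

```python
def solve_linear(A, b):
    """Solve A x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        if abs(M[pivot][col]) < 1e-12:
            raise ValueError("singular system (e.g. power equal to alpha)")
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(n):
            if r != col:
                factor = M[r][col] / M[col][col]
                M[r] = [a - factor * p for a, p in zip(M[r], M[col])]
    return [M[i][n] / M[i][i] for i in range(n)]


def false_discovery_rate(power, alpha, ship_rate):
    """Return FP / (FP + TP) given power, alpha and the observed ship rate."""
    # Unknowns, in order: TP, FP, TN, FN (shares of all experiments).
    A = [
        [1 - power, 0.0, 0.0, -power],  # TP / (TP + FN) = power
        [0.0, 1 - alpha, -alpha, 0.0],  # FP / (FP + TN) = alpha (assumed)
        [1.0, 1.0, 0.0, 0.0],           # TP + FP = ship rate
        [1.0, 1.0, 1.0, 1.0],           # shares sum to 1
    ]
    b = [0.0, 0.0, ship_rate, 1.0]
    tp, fp, tn, fn = solve_linear(A, b)
    return fp / (fp + tp)


# Hypothetical inputs: 80% power, 5% significance level, 20% of experiments ship.
fdr = false_discovery_rate(power=0.8, alpha=0.05, ship_rate=0.2)
print(f"False Discovery Rate: {fdr:.0%}")
```

With these example inputs, one shipped feature in five is a false discovery, even though every one of them cleared the 5% significance bar. The shares only come out non-negative when the ship rate sits between alpha and power.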

Code: here

Good luck!
